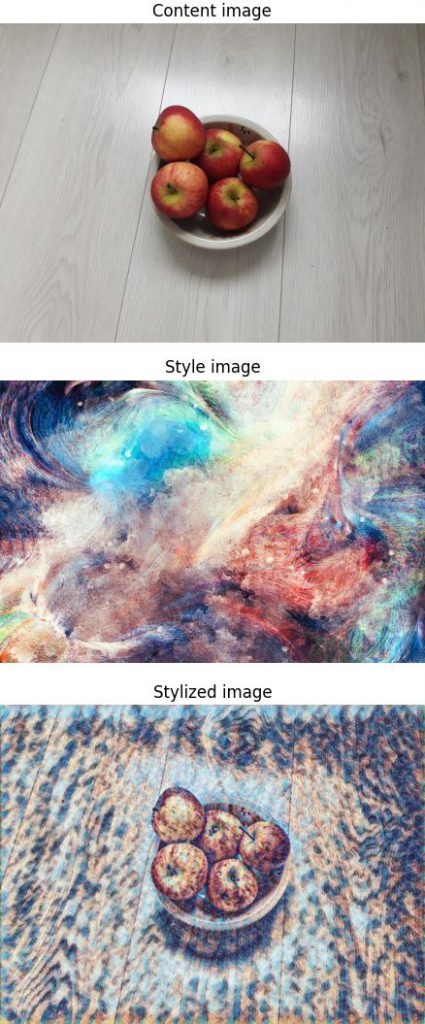

Neural style transfer (NST) is a technique that applies the style of one image to another image. NST uses two images: a content image and a style image. The images are blended to create a new image that keeps the content of the content image but looks as if it were painted in the style (colors and textures) of the style image. NST is commonly used to create artwork from photographs.

This tutorial shows how to use a pre-trained NST model to create artwork. We will use a model from the TensorFlow Hub repository.

Using the pip package manager, install tensorflow, tensorflow-hub, and matplotlib (used to display the results) from the command line.

pip install tensorflow
pip install tensorflow-hub
pip install matplotlib

We read and preprocess the images. To decode the images, we use the tf.io.decode_image function, which detects the image format (JPEG, PNG, BMP, or GIF) and converts the encoded bytes into a Tensor.
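To see what tf.io.decode_image returns, you can round-trip a tiny image through tf.io.encode_png. This is a sketch: the 2x3 RGBA test image is made up for illustration. By default the decoder keeps every channel stored in the file, so an RGBA PNG decodes with 4 channels; passing channels=3 forces RGB, and expand_animations=False guarantees a 3-D result even for an animated GIF (only its first frame is returned).

```python
import tensorflow as tf

# A made-up 2x3 RGBA image (height=2, width=3, 4 channels) with uint8 values
rgba = tf.constant(
    [[[255, 0, 0, 255], [0, 255, 0, 255], [0, 0, 255, 255]],
     [[10, 20, 30, 128], [40, 50, 60, 128], [70, 80, 90, 128]]],
    dtype=tf.uint8,
)

# Encode to PNG bytes, as if they had been read with tf.io.read_file
png_bytes = tf.io.encode_png(rgba)

# decode_image detects the format from the bytes and keeps all 4 channels by default
decoded = tf.io.decode_image(png_bytes)
print(tuple(decoded.shape), decoded.dtype.name)  # (2, 3, 4) uint8

# channels=3 drops the alpha channel; expand_animations=False keeps the result 3-D
rgb = tf.io.decode_image(png_bytes, channels=3, expand_animations=False)
print(tuple(rgb.shape))  # (2, 3, 3)
```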

We resize the style image using the tf.image.resize function. The recommended size of the style image is 256x256 pixels, which is the size used to train the NST model. The content image can be of any size.
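A detail worth checking is the argument order and output type of tf.image.resize. The sketch below uses a made-up solid-gray image: the size argument is (new_height, new_width), and with the default bilinear method the result is float32 while the pixel values keep their original 0 to 255 scale.

```python
import tensorflow as tf

# Made-up 400x300 (height x width) RGB image, every pixel set to 200
image = tf.fill([400, 300, 3], tf.constant(200, dtype=tf.uint8))

# size is (new_height, new_width); bilinear interpolation is the default
resized = tf.image.resize(image, (256, 256))
print(tuple(resized.shape), resized.dtype.name)  # (256, 256, 3) float32

# A non-square target makes the (height, width) order visible
tall = tf.image.resize(image, (128, 64))
print(tuple(tall.shape))  # (128, 64, 3)

# Values are still on the 0-255 scale; only the dtype changed
print(float(tf.reduce_max(resized)))
```

Because the values stay in the 0 to 255 range, the division by 255 is still needed after resizing.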

The model requires inputs of shape [batch, height, width, channels], but our images have shape [height, width, channels]. We add a batch dimension by passing axis=0 to the tf.expand_dims function.
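The shape change is easy to confirm on a placeholder tensor. In this sketch the 480x640 image of zeros is made up; tf.expand_dims with axis=0 inserts a leading batch axis of size 1, and indexing with tf.newaxis is an equivalent alternative.

```python
import tensorflow as tf

# Placeholder image with shape [height, width, channels]
image = tf.zeros([480, 640, 3])

# Insert a batch axis at position 0: [1, height, width, channels]
batch = tf.expand_dims(image, axis=0)
print(tuple(batch.shape))  # (1, 480, 640, 3)

# Equivalent indexing form: add a new leading axis
batch2 = image[tf.newaxis, ...]
print(tuple(batch2.shape))  # (1, 480, 640, 3)
```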

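To normalize the data, we divide each pixel value (0 to 255 for 8-bit images) by 255, so that the values fall in the range 0 to 1, the input range the model expects. Keeping inputs in a small, consistent range also generally helps neural networks train and run well.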
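The normalization step can be checked on a few made-up pixel values. Dividing a uint8 tensor by 255 produces float32 values in [0, 1]. As an alternative that the tutorial does not use, tf.image.convert_image_dtype performs the same scaling when it converts an integer image to a float type.

```python
import tensorflow as tf

# Made-up 8-bit pixel values
pixels = tf.constant([0, 51, 255], dtype=tf.uint8)

# True division of a uint8 tensor yields float32 values in [0, 1]
scaled = pixels / 255
print(scaled.dtype.name, scaled.numpy())

# convert_image_dtype rescales integer images to [0, 1] when converting to float
converted = tf.image.convert_image_dtype(pixels, tf.float32)
print(converted.numpy())
```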

We use the hub.load function to load the TensorFlow Hub module, which downloads the neural style transfer model and caches it on the filesystem.

The model returns a list of outputs; its first element, outputs[0], is the stylized image with shape [batch, height, width, channels]. To display an image, however, the shape should be [height, width, channels]. We remove the batch dimension using the tf.squeeze function.
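When removing the batch dimension, passing axis=0 to tf.squeeze is a slightly safer choice than calling it with no axis. Without an axis, tf.squeeze drops every dimension of size 1, which would also remove the width or height of a 1-pixel-wide or 1-pixel-tall image. The tensors below are placeholders shaped like the model output.

```python
import tensorflow as tf

# Placeholder shaped like the model output: [batch, height, width, channels]
batch = tf.zeros([1, 256, 256, 3])
image = tf.squeeze(batch, axis=0)
print(tuple(image.shape))  # (256, 256, 3)

# A made-up 1-pixel-wide image shows the difference
thin = tf.zeros([1, 10, 1, 3])
print(tuple(tf.squeeze(thin).shape))          # (10, 3): width lost as well
print(tuple(tf.squeeze(thin, axis=0).shape))  # (10, 1, 3): only batch removed
```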

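import tensorflow as tf
import tensorflow_hub as hub
import matplotlib.pyplot as plt

# Style image size used to train the model (tf.image.resize expects (height, width))
HEIGHT, WIDTH = (256, 256)

# Read and decode the content image; channels=3 drops any alpha channel,
# and expand_animations=False returns only the first frame of a GIF
contentImgOriginal = tf.io.read_file('content.jpg')
contentImgOriginal = tf.io.decode_image(contentImgOriginal, channels=3, expand_animations=False)
# Add a batch dimension and scale pixel values to [0, 1]
contentImg = tf.expand_dims(contentImgOriginal, axis=0) / 255

# Read, decode, and resize the style image to 256x256
styleImgOriginal = tf.io.read_file('style.jpg')
styleImgOriginal = tf.io.decode_image(styleImgOriginal, channels=3, expand_animations=False)
styleImg = tf.image.resize(styleImgOriginal, (HEIGHT, WIDTH))
styleImg = tf.expand_dims(styleImg, axis=0) / 255

# Download the model from TensorFlow Hub (cached on the filesystem after the first run)
url = 'https://tfhub.dev/google/magenta/arbitrary-image-stylization-v1-256/2'
hubModule = hub.load(url)

# Run style transfer; the first output holds the stylized image batch
outputs = hubModule(tf.constant(contentImg), tf.constant(styleImg))
# Remove the batch dimension: [1, H, W, 3] -> [H, W, 3]
stylizedImg = tf.squeeze(outputs[0], axis=0)

# Finally, we display the images
fig, (ax1, ax2, ax3) = plt.subplots(1, 3)
ax1.axis('off')
ax1.set_title('Content image')
ax1.imshow(contentImgOriginal)
ax2.axis('off')
ax2.set_title('Style image')
ax2.imshow(styleImgOriginal)
ax3.axis('off')
ax3.set_title('Stylized image')
ax3.imshow(stylizedImg)
plt.show()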
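The read, decode, and normalize steps are repeated for both images, so they can be folded into one helper. This is a minimal sketch: load_image is a hypothetical name, not part of TensorFlow, and it uses tf.image.convert_image_dtype for the [0, 1] scaling instead of dividing by 255 (the result is the same for 8-bit images). The demo writes a made-up solid-white PNG to a temporary directory so it runs without the tutorial's content.jpg and style.jpg.

```python
import os
import tempfile

import tensorflow as tf


def load_image(path, size=None):
    """Hypothetical helper: return a [1, H, W, 3] float32 batch with values in [0, 1].

    If size=(height, width) is given, the image is resized, as done for the style image.
    """
    data = tf.io.read_file(path)
    # channels=3 drops alpha; expand_animations=False keeps GIFs 3-D (first frame)
    image = tf.io.decode_image(data, channels=3, expand_animations=False)
    # Convert uint8 [0, 255] to float32 [0, 1]
    image = tf.image.convert_image_dtype(image, tf.float32)
    if size is not None:
        image = tf.image.resize(image, size)
    # Add the batch dimension the model expects
    return tf.expand_dims(image, axis=0)


# Demo with a made-up 40x30 white PNG written to a temporary file
tmp_dir = tempfile.mkdtemp()
path = os.path.join(tmp_dir, 'demo.png')
white = tf.fill([40, 30, 3], tf.constant(255, dtype=tf.uint8))
tf.io.write_file(path, tf.io.encode_png(white))

content = load_image(path)
style = load_image(path, size=(256, 256))
print(tuple(content.shape), tuple(style.shape))  # (1, 40, 30, 3) (1, 256, 256, 3)
```

With this helper, the model call from the tutorial would read hubModule(load_image('content.jpg'), load_image('style.jpg', size=(256, 256))).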
